Bridging AI and Super-Resolution Microscopy: Highlights from the AI4Life Workshop & Hackathon at SMLMS 2024

Posted by Estibaliz Gómez-de-Mariscal, on 22 July 2025

In the days leading up to the Single Molecule Localization Microscopy Symposium (SMLMS) 2024, AI4Life hosted the Pre-symposium AI4Life Workshop & Hackathon: Trends in AI for Super-Resolution Microscopy at the Instituto Gulbenkian de Ciência (IGC), now the Gulbenkian Institute for Molecular Medicine (GIMM), in Portugal. The event brought together an interdisciplinary group of leading and early-career researchers to explore the intersection of artificial intelligence and super-resolution microscopy. The programme featured a series of talks, hands-on tutorials, group working sessions, and a round-table discussion, leading to engaging highlights, vibrant discussions, and new research opportunities, all summarised below.

Deep Learning Algorithms and Tools for Microscopy Image Analysis

Some of the speakers introduced user-friendly tools for processing microscopy images with deep learning. Estibaliz Gómez-de-Mariscal highlighted the BioImage Model Zoo, which promotes shared, FAIR-compliant AI models that biologists can access and contribute to. Despite the significant number of models available for segmentation, she stressed that few models exist for super-resolution microscopy and (particle) tracking.

Guillaume Jacquemet and Christophe Spahn showed how their teams apply deep learning in their daily research activities. Jacquemet’s lab combines microfluidics and live-cell imaging to study cancer cell extravasation. They use image analysis platforms such as ZeroCostDL4Mic and CellTracksColab to determine how cells interact with the endothelial cell barrier. Spahn’s group applies supervised deep learning with DeepBacs to enhance image resolution, as widefield images do not provide enough features for robust chromosome visualisation in microbiology. These image processing tasks let his team address technical imaging limitations such as the motion blur created during stack acquisition.

Tayla Shakespeare shared her experience analysing cargo sorting at the endosome membrane. Her team uses expansion microscopy and 3D cluster segmentation with machine learning in Ilastik. She discussed some advantages of machine learning (e.g., lack of human bias and efficient segmentation) and its limitations regarding scarce training data and high 3D feature variability. Arrate Muñoz-Barrutia provided a retrospective on methods development and the capabilities it has brought to research. She compared the early reconstruction of mammary glands with new combined cellular and tissue-level quantification of entire tumoral biopsies. Their recent InteprolAI applies generative AI for slow-motion video interpolation to reconstruct volumetric human tumoral biopsies.

AI-Powered Image Acquisition and Hardware Innovations in Super-Resolution Microscopy

Clément Cabriel introduced event-based sensors, which asynchronously capture signal variations at the pixel level. This novel technique enables faster and more efficient SMLM data acquisition, and the resulting data can be analysed with EVE-software, a Python-based tool developed by the team. Clément highlighted the lack of AI methods for particle tracking and of architectures that can work with such sparse events. He also stressed that most of the data within these sparse, asynchronous event lists is meaningful, and that SMLM data simulators already exist, which could be an advantage for training machine learning models.
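To make the idea of a sparse, asynchronous event list more concrete, here is a minimal sketch of how such data might be binned into conventional frames. The `Event` record, its field names, and the `accumulate_events` function are hypothetical and chosen only for illustration; they are not the EVE-software API, and real event-based SMLM pipelines are considerably more involved.

```python
from collections import namedtuple

# Hypothetical event record: pixel coordinates, timestamp in microseconds,
# and polarity (+1 for an intensity increase, -1 for a decrease).
Event = namedtuple("Event", ["x", "y", "t_us", "polarity"])


def accumulate_events(events, width, height, window_us):
    """Bin an asynchronous event list into frames of fixed duration.

    Returns a list of frames. Each frame is a height x width nested list
    holding the signed sum of event polarities per pixel.
    """
    if not events:
        return []
    t0 = min(e.t_us for e in events)
    t_last = max(e.t_us for e in events)
    n_frames = (t_last - t0) // window_us + 1
    frames = [[[0] * width for _ in range(height)] for _ in range(n_frames)]
    for e in events:
        frame_index = (e.t_us - t0) // window_us
        frames[frame_index][e.y][e.x] += e.polarity
    return frames


# Three toy events on a 3 x 2 pixel sensor, binned into 100 µs windows.
events = [
    Event(x=1, y=0, t_us=10, polarity=+1),
    Event(x=1, y=0, t_us=40, polarity=+1),
    Event(x=2, y=1, t_us=120, polarity=-1),
]
frames = accumulate_events(events, width=3, height=2, window_us=100)
```

Binning like this discards the fine timing that makes event data attractive, which is one reason architectures that consume the event list directly would be valuable.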

Nour Mohammad Alsamsam presented miEye, a cost-effective microscope designed for high-resolution wide-field fluorescence imaging and equipped with the MicroEye 2.2.0 environment. MicroEye encapsulates the hardware (miEye 2.0), image analysis pipelines such as drift correction, a pycromanager-based interface to interact with the hardware, and an “out of focus” module, which has a graphical user interface, and a chatbot that users can interact with.

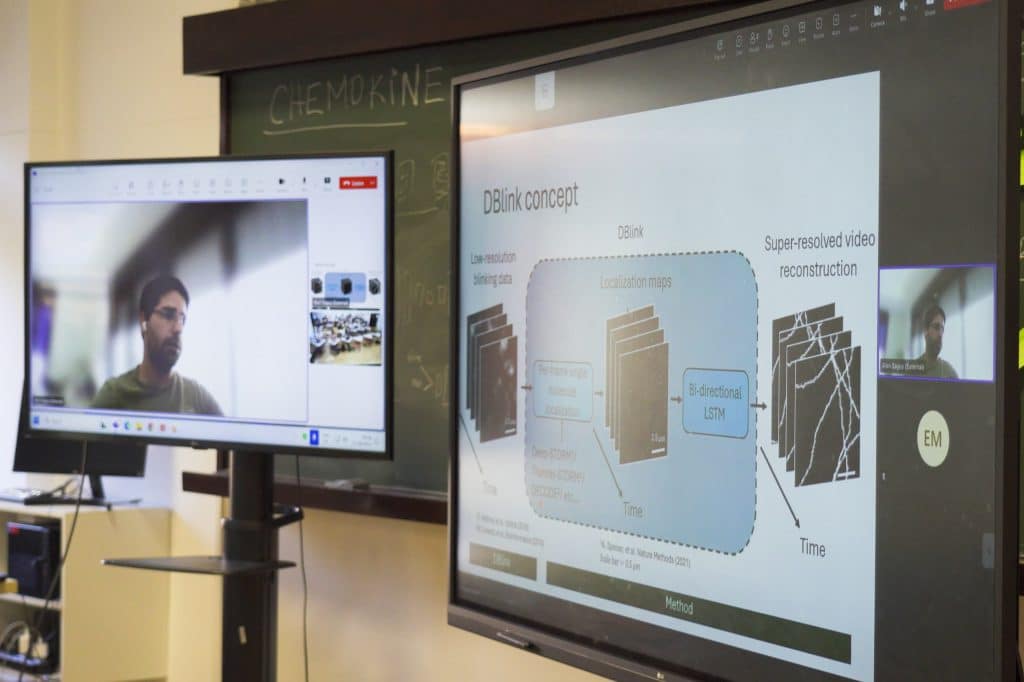

Cecilia Zaza showed how they combine spinning disc confocal microscopy with optical photon reassignment (SDC-OPR) and DNA-PAINT, achieving sub-10 nm resolution through multiple cellular layers. The approach enhances spatial resolution while remaining practical and accessible for diverse biological applications. Alon Saguy presented DBlink, a deep learning method enabling high spatiotemporal video resolution by reconstructing SMLM image data.

Generative and Data-Driven AI Models for Synthetic Data and Simulation

Nils Mechtel introduced some of the challenges they are addressing while building a virtual cell: handling massive datasets and computational resources that allow deploying AI models in parallel. He showcased BioImage.IO Colab, a crowdsourcing image annotation tool that leverages the BioEngine and computational infrastructure built at AICell Lab to create training data collaboratively. Saguy presented their latest work using diffusion models to generate realistic synthetic super-resolution microscopy images. Hyoungjun (Peter) Park introduced an unsupervised CycleGAN model that improves axial resolution in volumetric microscopy, relaxing imaging constraints on light exposure and acquisition speed.

Highlights and identified needs in the field

We identified sub-fields and tasks for which deep learning methods are still missing or limited by the intrinsic characteristics of the acquisition and the data itself:

- Particle tracking: A lack of efficient methods and annotated data for particle tracking was identified (e.g., AnDi Challenge). Unlike cell tracking, particle tracking involves different diffusion regimes, motion blur caused by astigmatism, high localisation uncertainty, etc.

- Point cloud analysis for (3D) SMLM: In SMLM image generation, hundreds of localisations are typically resolved. Therefore, a common data format is a list of localisations rather than an image or a video. Yet, there are only a few deep learning architectures designed to learn from and process this format.

- Super-resolution clustering methods for particle identification and analysis in 2D and 3D: New microscopy techniques such as expansion microscopy and DNA-PAINT allow the observation of protein conglomerates and their distribution within cells and tissues, but their quantification is limited by insufficient training data, uncertainty when manually annotating images, and high uncertainty in the structures observed.
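As an illustration of the localisation-list format mentioned in the point cloud item, the sketch below shows a toy localisation table and one simple way to turn it into an image by counting localisations per pixel. The `Localisation` record, its column names, and `render_histogram` are assumptions made for this example and do not correspond to any specific SMLM software; real tables usually carry more columns (frame index, photon count, axial position, and so on).

```python
from collections import namedtuple

# Hypothetical localisation record: lateral position in nanometres plus
# the estimated localisation uncertainty.
Localisation = namedtuple("Localisation", ["x_nm", "y_nm", "sigma_nm"])


def render_histogram(localisations, pixel_nm, width_px, height_px,
                     max_sigma_nm=None):
    """Render a localisation list as a 2D count image.

    Localisations with an uncertainty above max_sigma_nm (if given) are
    skipped, as are those falling outside the image bounds.
    """
    image = [[0] * width_px for _ in range(height_px)]
    for loc in localisations:
        if max_sigma_nm is not None and loc.sigma_nm > max_sigma_nm:
            continue
        col = int(loc.x_nm // pixel_nm)
        row = int(loc.y_nm // pixel_nm)
        if 0 <= row < height_px and 0 <= col < width_px:
            image[row][col] += 1
    return image


# Four toy localisations rendered on a 3 x 2 grid of 10 nm pixels.
table = [
    Localisation(x_nm=5.0, y_nm=5.0, sigma_nm=8.0),
    Localisation(x_nm=12.0, y_nm=3.0, sigma_nm=10.0),
    Localisation(x_nm=14.0, y_nm=4.0, sigma_nm=45.0),
    Localisation(x_nm=25.0, y_nm=18.0, sigma_nm=12.0),
]
all_counts = render_histogram(table, pixel_nm=10, width_px=3, height_px=2)
filtered = render_histogram(table, pixel_nm=10, width_px=3, height_px=2,
                            max_sigma_nm=20.0)
```

Rendering makes the data compatible with image-based networks, but it throws away per-localisation information such as the uncertainty, which is part of the motivation for architectures that operate on the list itself.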

Other questions raised by participants involved results validation and assessment (e.g., how can I determine if the data has enough information/features to learn a certain task?), as well as the definition of new metrics to benchmark image denoising and restoration tasks.

Round table discussion

To close the event, we had the pleasure of discussing the topics raised during the workshop with Ricardo Henriques, Guillaume Jacquemet, Arrate Muñoz-Barrutia, and Ignacio Izeddin. They highlighted the overwhelming number of AI models and methods available, with insufficient benchmarks to guide users in selecting the best model. Arrate suggested that AI-powered chatbots (e.g., BioImageIO chatbot) could help navigate this complexity, but stressed that better documentation and standardized model descriptions are essential for this approach to be effective.

They also acknowledged that developing AI methods without ground truth is challenging; cross-validation and simulations can guide progress, but biological relevance should remain the primary validation criterion, accepting inherent limitations. One such limitation is data quality, which often depends on the acquisition process and may require a cleaning step. If data is not adequately prepared for training, it can negatively impact the model learning process and performance.

A take-home message was that “the results of deep learning are not always black or white. Predictions are not entirely good or bad—they may offer improvements but come at the cost of losing certain features. As a user, you must carefully evaluate whether these trade-offs are acceptable for your application”.

Guillaume emphasized that skepticism is healthy to ensure rigorous validation. This caution potentially forces researchers to publish the data alongside results, benefiting the community with improved transparency and data-sharing, and improving the overall assessment of AI methods. Indeed, while biological relevance is specific to individual conditions, AI’s ability to integrate diverse data types and standardize results is a major advantage for biological applications.

On data sharing and federated learning, the table supported wider access to diverse datasets from multiple labs to improve model robustness and generalization. Ricardo explained, for example, that seeing images acquired through different procedures would help models learn to adapt to various conditions. Yet, they noted challenges in managing large heterogeneous datasets, merging different conditions, and identifying potential “data contamination” that could affect model training. They recommended clear definitions or separate uploads so that users can decide whether to combine data. They highlighted existing comparable projects, such as the Electron Microscopy Data Bank (EMDB), which consolidates electron microscopy datasets.

Large language models (LLMs) inevitably came up in this discussion. They were compared to early calculators: initially banned in schools, but now widely used. Ricardo highlighted their significant potential for everyday use, such as enhancing search tools or generating repetitive code, as long as the accuracy of the produced content is reviewed. The table also discussed that in biology-related applications, LLMs could be used to annotate biological data, creating a federated system that follows consistent structures, greatly enhancing standardization and efficiency.

Acknowledgments

This post summarizes the collective insights and contributions of all workshop participants, with special thanks to the organizers, Iván Hidalgo Cenalmor, Mariana G Ferreira and Inês Geraldes, who created a highly exciting and dynamic environment and collected all the information presented here. Thanks also to the SMLMS 2024 organizing committee, Ricardo Henriques and Pedro M Pereira, who made this event possible, AI4Life, and the presenters who shared their expertise and vision for the future of AI in super-resolution microscopy.
